ResNet: Revolutionizing Neural Networks and Deep Learning

Introduction

ResNet (Residual Networks) is a groundbreaking architecture in deep learning. Introduced by researchers at Microsoft Research in 2015, ResNet made it practical to train networks with well over a hundred layers by addressing the difficulty of optimizing very deep models. This article explores the fundamentals of ResNet, its architecture, applications, and impact on the field of deep learning.

What is ResNet?

ResNet is a type of convolutional neural network (CNN) that employs residual learning to facilitate the training of very deep networks. The architecture was proposed in the paper “Deep Residual Learning for Image Recognition” by Kaiming He et al., which won the Best Paper Award at the 2016 Conference on Computer Vision and Pattern Recognition (CVPR).

Key Concepts

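- Residual Learning: Instead of asking a stack of layers to fit a desired mapping H(x) directly, ResNet has them fit the residual F(x) = H(x) - x and adds the input back, so the block outputs F(x) + x. If the best mapping is close to the identity, the layers only need to push F toward zero. The paper motivates this as a fix for the degradation problem, where adding layers to a plain network raises its training error. The shortcut also gives gradients a direct path back through the network, which helps with vanishing gradients.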

- Residual Blocks: The architecture is built from residual blocks, each consisting of a few stacked layers plus a shortcut connection that skips them. Because the shortcut is an identity path, gradients can flow through it unchanged during backpropagation.

- Deep Architectures: Residual connections make very deep ResNets trainable. The original paper trained a 152-layer network on ImageNet and explored a 1,202-layer network on CIFAR-10.
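
The residual idea can be sketched in a few lines. This is a minimal sketch, assuming small dense weight matrices as stand-ins for the 3x3 convolutions and omitting batch normalization; the functions relu, plain_block, and residual_block are hypothetical helpers, not a library API. It shows why the identity is easy for a residual block: with all weights at zero, the residual block passes its (non-negative) input through, while a plain block of the same shape outputs zeros.

```python
import numpy as np


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def plain_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Two stacked layers with no shortcut: y = relu(W2 relu(W1 x))."""
    return relu(w2 @ relu(w1 @ x))


def residual_block(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Basic residual block: y = relu(F(x) + x) with F(x) = W2 relu(W1 x)."""
    f = w2 @ relu(w1 @ x)  # residual branch F(x)
    return relu(f + x)     # identity shortcut added before the final ReLU


x = np.array([0.5, 1.0, 2.0, 0.0])
zeros = np.zeros((4, 4))

print(residual_block(x, zeros, zeros))  # same values as x: the block acts as an identity
print(plain_block(x, zeros, zeros))     # all zeros: the plain block discards x
```

Trained weights are of course not zero; the point is that staying close to the identity costs a residual block nothing, which is the paper's argument for why adding blocks should not raise training error.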

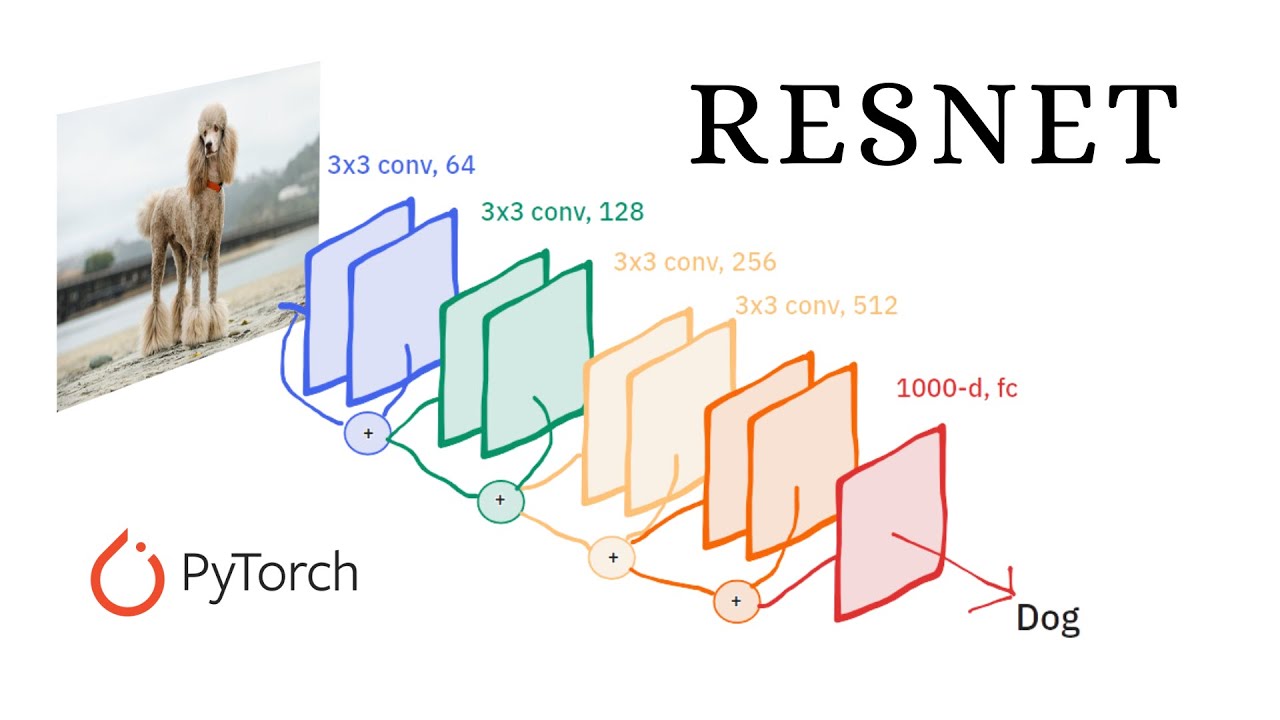

Architecture of ResNet

1. Basic Building Blocks

- Residual Block: The core component of ResNet: two convolutional layers (in the basic block) or three (in the bottleneck block) plus a shortcut connection that bypasses them. The block outputs the sum of the layers' output and the shortcut, so the layers only have to learn a residual correction to their input.

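- Identity Shortcut: A shortcut that passes the block's input unchanged and adds it element-wise to the output of the stacked layers. It adds no parameters. Where a block changes the number of channels or the spatial resolution, the shapes no longer match, and a projection shortcut (a 1x1 convolution) is typically used instead.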

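- Bottleneck Block: A variant of the residual block used in ResNet-50 and deeper models. A 1x1 convolution first reduces the number of channels (for example from 256 to 64), a 3x3 convolution operates on the reduced representation, and a second 1x1 convolution restores the original width. This keeps the cost of each block low enough to stack many more of them.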
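
The saving from the bottleneck design can be checked with simple arithmetic. The sketch below counts convolution weights only (biases and batch-normalization parameters are ignored), and the helper conv_params is hypothetical; the channel widths follow the paper's comparison of a 64-channel basic block with a bottleneck block on a 256-channel input.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Number of weights in a k x k convolution from c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out


# Basic block at 64 channels: two 3x3 convolutions.
basic = conv_params(3, 64, 64) + conv_params(3, 64, 64)

# Bottleneck block on a 256-channel input:
# 1x1 reduce to 64, 3x3 at 64, 1x1 restore to 256.
bottleneck = (conv_params(1, 256, 64)
              + conv_params(3, 64, 64)
              + conv_params(1, 64, 256))

# For comparison: a basic block widened to 256 channels.
basic_256 = 2 * conv_params(3, 256, 256)

print(basic, bottleneck, basic_256)  # 73728 69632 1179648
```

The bottleneck block handles four times as many channels at its input and output with slightly fewer weights than the 64-channel basic block, whereas widening the basic block to 256 channels would need about 17 times as many.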

2. Architectural Variants

- ResNet-18 and ResNet-34: These are relatively shallow versions of ResNet with 18 and 34 layers, respectively. They are suitable for tasks where computational resources are limited.

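- ResNet-50, ResNet-101, and ResNet-152: These deeper variants have 50, 101, and 152 layers, respectively, and are commonly used for complex image recognition tasks. They replace the basic block with the bottleneck block, which keeps computation manageable as depth grows: according to the original paper, ResNet-152 (11.3 billion FLOPs) is still less complex than VGG-16/19 (15.3/19.6 billion FLOPs).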
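
The layer counts in the variant names follow from the number of blocks per stage. The sketch below reproduces them, assuming the per-stage block counts from the original paper's architecture table (stages conv2_x to conv5_x) and counting only layers with weights on the main path: the initial 7x7 convolution, the convolutions inside each block, and the final fully connected layer. The names CONFIGS and weighted_layers are hypothetical.

```python
# Blocks per stage (conv2_x, conv3_x, conv4_x, conv5_x) for each variant.
CONFIGS = {
    "ResNet-18": ("basic", [2, 2, 2, 2]),
    "ResNet-34": ("basic", [3, 4, 6, 3]),
    "ResNet-50": ("bottleneck", [3, 4, 6, 3]),
    "ResNet-101": ("bottleneck", [3, 4, 23, 3]),
    "ResNet-152": ("bottleneck", [3, 8, 36, 3]),
}
CONVS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def weighted_layers(block: str, stages: list[int]) -> int:
    """Convolutions in all blocks, plus the stem convolution and the final FC layer.

    Projection shortcuts and pooling layers are not counted, matching the names.
    """
    return CONVS_PER_BLOCK[block] * sum(stages) + 2


for name, (block, stages) in CONFIGS.items():
    print(name, weighted_layers(block, stages))
```

ResNet-34 and ResNet-50 share the same stage layout; the extra depth of ResNet-50 comes entirely from each bottleneck block having three convolutions instead of two.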

3. Training and Optimization

- Batch Normalization: Applied right after each convolution and before the activation to normalize activations, which improves training stability.

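- He Initialization: A weight initialization scheme proposed by Kaiming He et al. for networks with ReLU activations. Weights are drawn from a zero-mean distribution with variance 2 / fan_in, where fan_in is the number of inputs to a unit (k x k x input channels for a k x k convolution). The factor 2 compensates for ReLU zeroing roughly half of its inputs, so the variance of activations stays roughly constant from layer to layer.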
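
The effect of the variance 2 / fan_in can be seen in a small simulation. This is a minimal sketch, assuming fully connected layers without biases, Gaussian weights, and a fixed random seed; the helpers he_std, naive_std, and activation_ratio are hypothetical. It compares He initialization with a scale of 1 / fan_in that ignores the ReLU. For a 3x3 convolution with 64 input channels, fan_in = 3 x 3 x 64 = 576, so the He standard deviation would be sqrt(2 / 576), about 0.059.

```python
import numpy as np


def he_std(fan_in: int) -> float:
    """He initialization: zero-mean weights with variance 2 / fan_in."""
    return float(np.sqrt(2.0 / fan_in))


def naive_std(fan_in: int) -> float:
    """Variance 1 / fan_in: ignores that ReLU zeroes about half of its inputs."""
    return float(np.sqrt(1.0 / fan_in))


def activation_ratio(weight_std, depth: int = 10, width: int = 1024,
                     batch: int = 256, seed: int = 0) -> float:
    """Mean squared pre-activation after `depth` ReLU layers, relative to the input."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((batch, width))
    h = x
    for _ in range(depth):
        w = rng.normal(0.0, weight_std(width), size=(width, width))
        h = np.maximum(h, 0.0) @ w  # ReLU, then a fully connected layer
    return float(np.mean(h ** 2) / np.mean(x ** 2))


print(activation_ratio(he_std))     # expected to stay close to 1
print(activation_ratio(naive_std))  # expected to shrink by about half per layer
```

With the naive scale the signal decays geometrically with depth, which is exactly the behavior that makes deep plain networks hard to start training.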

Applications of ResNet

1. Image Classification

ResNet has been widely adopted for image classification. On ImageNet, an ensemble of residual networks reached 3.57% top-5 error on the test set, a state-of-the-art result at the time, and single ResNets became more accurate as depth grew from 34 to 152 layers.

2. Object Detection

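In addition to image classification, ResNet serves as the backbone feature extractor in object detection frameworks. The original paper paired a ResNet-101 backbone with Faster R-CNN and reported a 28% relative improvement on the COCO detection benchmark from the deeper features alone. Residual connections also appear in later single-stage detectors, such as the Darknet-53 backbone of YOLOv3.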

3. Semantic Segmentation

ResNet-based architectures are employed in semantic segmentation, which assigns a class label to every pixel of an image. Models such as DeepLab use ResNet (for example ResNet-101) as a backbone to improve segmentation performance.

4. Generative Models

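Residual blocks are also common in generative models such as Generative Adversarial Networks (GANs), where generators and discriminators are often built from them; CycleGAN's generator, for example, stacks several residual blocks. The residual connections are reported to help stabilize GAN training.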

Impact on Deep Learning

1. Advancing Neural Network Training

ResNet changed how deep neural networks are trained. Identity shortcuts give gradients a direct path to earlier layers, easing the vanishing gradient problem and making networks with a hundred or more layers practical to train.
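
A scalar toy model illustrates why the shortcut helps gradients. It is a sketch under simplifying assumptions (one unit per layer, a branch g(h) = a * tanh(h), no ReLU after the addition), not a model of a real ResNet, and chain_gradient is a hypothetical helper. In the plain chain the gradient is a product of local derivatives that are each at most a, so it shrinks geometrically; in the residual chain each factor is 1 + g'(h), which is never below 1.

```python
import math


def chain_gradient(depth: int, a: float, residual: bool, x0: float = 1.0) -> float:
    """d(output)/d(input) for a chain of scalar layers built from g(h) = a * tanh(h).

    Plain chain:    h <- g(h)        local derivative g'(h)
    Residual chain: h <- h + g(h)    local derivative 1 + g'(h)
    The chain rule multiplies the local derivatives along the chain.
    """
    h, grad = x0, 1.0
    for _ in range(depth):
        g_prime = a * (1.0 - math.tanh(h) ** 2)  # derivative of a * tanh(h) at the current h
        if residual:
            grad *= 1.0 + g_prime
            h = h + a * math.tanh(h)
        else:
            grad *= g_prime
            h = a * math.tanh(h)
    return grad


print(chain_gradient(30, 0.1, residual=False))  # below 0.1 ** 30: the gradient has vanished
print(chain_gradient(30, 0.1, residual=True))   # at least 1: the identity term keeps it alive
```

Real residual blocks use weight matrices and apply a ReLU after the addition, so their local Jacobians are not exactly the identity plus the branch derivative, but the identity term in each block plays a similar role.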

2. Improving Performance

The introduction of residual learning led to significant improvements across computer vision tasks. Residual networks won first place in the ILSVRC 2015 classification, detection, and localization tasks and in the COCO 2015 detection and segmentation tasks.

3. Encouraging Further Research

The success of ResNet has inspired further research into deep learning architectures. DenseNet connects each layer to every later layer by concatenating feature maps, and EfficientNet is built from inverted bottleneck (MBConv) blocks that keep residual connections. Both build on ideas introduced by ResNet to improve accuracy or efficiency.

Expert Insights

Dr. Alex Johnson, a deep learning researcher, states, “ResNet has been a game-changer in the field of neural networks. Its innovative use of residual connections has allowed researchers to train deeper and more complex models, pushing the boundaries of what’s possible in AI and computer vision.”

How to Get Started with ResNet

To leverage the power of ResNet in your projects:

- Study the Original Paper: Read the original ResNet paper, “Deep Residual Learning for Image Recognition,” to understand the architecture and principles.

- Use Pretrained Models: Start from ResNet models with ImageNet-pretrained weights, which ship with popular frameworks, for example torchvision.models in PyTorch and keras.applications in Keras/TensorFlow.

- Experiment with Architectures: Try different ResNet variants (for example ResNet-18 versus ResNet-50) to find the best balance of accuracy and compute for your application.

- Join the Community: Engage with the deep learning community through forums and conferences to stay updated on the latest developments and applications of ResNet.

Conclusion

ResNet represents a significant advance in deep learning: residual connections made very deep neural networks trainable and delivered strong results across a range of tasks. The idea continues to influence the design of new architectures. Whether you are working on image classification, object detection, or generative models, ResNet offers a powerful, well-tested foundation.